Cristián Vogel is on his way to the music conservatory in Aalborg, Denmark to present a few days of composition and sound design workshops.

He writes of Kyma: “Effortless to set up on a train, wonderful I can keep working on my NEL VCS Player…”

Official organ of the Symbolic Sound Corporation

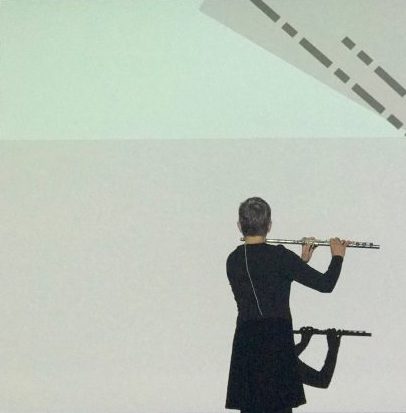

Composer/performer Anne La Berge was the featured artist for the 2025 SPLICE Institute, an annual institute designed for performers and composers to study the integration of performance with electronics. There she presented several performances, worked with student composers, and was invited to give an introductory session on how she uses Kyma in her work.

During the conference, she became a role model for students seeking artistic careers outside of academia.

Earlier in the summer, she was featured in the Cortona Sessions for New Music (20 July – 1 August, 2025 in Ede, Netherlands) — an educational program dedicated to the creation and performance of contemporary music, and a meeting place for emerging composers and performers seeking to collaborate, learn, grow, and create.

By augmenting traditional sample-based sound design with generative models, you gain additional parameters that you can perform to picture or control with a data stream from a model world — like a game engine. Once you have a parameterized model, you can generate completely imaginary spaces populated by hallucinatory sound-generating objects and creatures.

Thanks to Mark Ali and Matt Jefferies for capturing and editing the lecture on Generative Sound Design in Kyma presented last November by Carla Scaletti for Charlie Norton’s students at the University of West London.

Franz Danksagmüller is offering a Kyma workshop next semester at the Musikhochschule Lübeck as part of a new Master’s Program in Organ Improvisation: Creativity, Innovation, and Interdisciplinarity – a course of study offering creative, cutting-edge musicians an opportunity to develop their artistic personalities through innovative improvisation, composition, electronic instrument and controller design, creative AI for improvisation and composition, generative video and sound design, and Kyma for live electronic performances.

Recently featured on NDR news, the groundbreaking program brings the art of organ improvisation into the 21st century and introduces the organ to artists from other disciplines. The focus is on current styles and techniques and on combining the organ with contemporary trends and modern media. Students develop individual forms of expression and acquire the skills necessary to realize innovative musical and live digital media projects and collaborations.

The new degree is closely aligned with the “Sound Arts and Creative Music Technology” degree program.

Through a collaboration with St. Nikolai church in Hamburg, students gain access to an innovative hyper-organ, where they can learn microphone placement for processing the organ sound, handle MIDI connections and electronic platforms, and use the organ as an interface for interactive and multimedia projects. The program also maintains close partnerships with the Orgelpark in Amsterdam (renowned for its pioneering work in merging tradition and modern technology) and with the experimental organ at St. Martin’s Church in Kassel (known for its quarter-tone manual, wind regulation options, and overtone registers), which offer additional ways to explore contemporary improvisation techniques and soundscapes.

Thanks to close collaborations with universities and institutions in Lübeck and Hamburg, as well as partnerships with international festivals, students benefit from extensive practical experience and networking opportunities. Collaboration with students from other disciplines is particularly encouraged at MHL.

Numerous partnerships with various festivals (including the Nordic Film Days Lübeck, the largest film festival in Northern Europe) and major churches in Northern Germany provide students with the opportunity to present their work to a broader audience.

The Master’s Program in Organ Improvisation: Creativity, Innovation, and Interdisciplinarity at Musikhochschule Lübeck combines tradition with innovation and opens doors to a new direction in musical creation, positioning the organ as a central interface for artistic expression.

For more details and to find out how to apply, visit:

https://www.mh-luebeck.de/de/studium/studiengaenge/master-of-music-orgel-improvisation/

At the IRCAM Forum Workshops @Seoul 6-8 November 2024, composer Steve Everett presented a talk on the compositional processes he used to create FIRST LIFE: a 75-minute mixed media performance for string quartet, live audio and motion capture video, and audience participation.

FIRST LIFE is based on work that Everett carried out at the Center for Chemical Evolution, an NSF/NASA-funded project at multiple universities examining whether the building blocks of life could have formed in early Earth environments. He worked with stochastic data generated by Georgia Tech biochemical engineer Martha Grover and mapped them to standard compositional structures (not as a scientific sonification, but to help educate the public about the work of the center through a musical performance).

Data from IRCAM software and PyMOL were mapped to parameters of physical models of instrumental sounds in Kyma. For example, up to ten data streams generated by the formation of monomers and polymers in Grover’s lab were used to control parameters of the “Somewhat stringish” model in Kyma (such as delay rate, BowRate, position, decay, etc.). Everett presented a poster about this work at the 2013 NIME Conference in Seoul, and has uploaded some videos from the premiere of FIRST LIFE at Emory University.
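As a rough illustration of what mapping a data stream onto a synthesis parameter can involve, here is a minimal Python sketch of linear rescaling. It is not Everett's actual mapping and does not use any Kyma API: the `rescale` helper, the sample values, and the 0.0 to 1.0 target range are all assumptions made for the example.

```python
def rescale(values, lo, hi):
    """Linearly map a sequence of numbers onto the range [lo, hi]."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        # A constant stream carries no variation; park it mid-range.
        return [(lo + hi) / 2.0 for _ in values]
    span = vmax - vmin
    return [lo + (v - vmin) / span * (hi - lo) for v in values]


# Made-up data standing in for one stream (e.g. polymer counts over time).
polymer_counts = [0, 3, 7, 12, 20]

# Hypothetical target: a bow-rate-like control that expects 0.0 to 1.0.
bow_rate = rescale(polymer_counts, 0.0, 1.0)
```

Each stream would get its own target range, so ten streams could drive ten different parameters of the same model without any of them leaving its useful range.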

Currently on the music composition faculty of the City University of New York (CUNY), Professor Everett is teaching a doctoral seminar on timbre in the spring (2025) semester, and next fall he will co-teach a course on music and the brain with Patrizia Casaccia, director of the Neuroscience Initiative at the CUNY Advanced Science Research Center.

A highlight of this year’s Cortona Sessions for New Music will be Special Guest Artist, Anne La Berge. Known for her work blending composed and improvised music, sound art, and storytelling, Anne will be working closely with composers and will be coaching performers on improvisation with live Kyma electronics!

The Cortona Sessions for New Music is scheduled for 20 July – 1 August 2025 in Ede, Netherlands, and includes twelve days of intensive exploration of contemporary music, collaboration, and discussions on what it takes to make a career as a 21st-century musician.

Anne is eager to work with instrumentalists and composers looking to expand their solo or ensemble performances through live electronics, so if you or someone you know is interested in working with Anne this summer, consider applying for the 2025 Cortona Sessions!

Applications are open now (Deadline: 1 February 2025). You can apply as a Composer, a Performer, or as a Groupie (auditor). A full-tuition audio/visual fellowship is available for applicants who can provide audio/visual documentation services and/or other technological support.

At the invitation of UWL Lecturer Charlie Norton, Carla Scaletti presented a lecture/demonstration on Generative Sound Design in Kyma for students, faculty and guests at the University of West London on 14 November 2024. As an unanticipated prelude, Pete Townshend (who, along with Joseph Townshend, works extensively with Kyma) welcomed the Symbolic Sound co-founders to his alma mater and invited attendees to tour the Townshend Studio following the lecture.

It seems that anywhere you look in the Townshend Studio, you see another rock legend. John Paul Jones (whose most recent live Kyma collaborations include Sons of Chipotle, Minibus Pimps, and Supersilent among others) recognized an old friend from across the room: a Yamaha GX-1 (1975), otherwise known as ‘The Dream Machine’, the same model JPJ played when touring with Led Zeppelin and when recording the 1979 album “In Through The Out Door”. The GX-1 was Yamaha’s first foray into synthesizers, and only 10 were ever manufactured; it featured a ribbon controller and a keyboard that could also move laterally for vibrato. Other early adopters included ELP, Stevie Wonder and Abba.

Did you know that you could study for a degree in sound design and work with Kyma at Carnegie Mellon University? Joe Pino, professor of sound design in the School of Drama at Carnegie Mellon University, teaches conceptual sound design, modular synthesis, Kyma, film sound design, ear training and audio technology in the sound design program.

Sound designs work in the spaces between reality and abstraction. They are less interesting as a collection of triggers for giving designed worlds reality. They are more effective when they trigger emotional responses and remembered experiences.

There are so many ways to learn Kyma (the online documentation, asking questions in the Kyma Discord, working with a private coach or group of friends…). This semester there are also two new university courses where not only can you learn Kyma, you’ll also have a chance to work on creative projects with a composer/mentor and interact with fellow Kyma sound designers in the studio while also earning credit toward a degree.

At Haverford, Bryn Mawr, and Swarthmore, you can sign up for MUSC H268A Sonic Narratives – Storytelling through Sound Synthesis with Professor Mei-ling Lee.

In “Sonic Narratives” you’ll learn to combine traditional instruments and electronic music technologies to explore storytelling through sound. Treating the language of sound as a potent narrative tool, the course covers advanced sound synthesis techniques such as Additive, Subtractive, FM, Granular, and Wavetable Synthesis using state-of-the-art tools like Kyma and Logic Pro. Beyond technical proficiency, students will explore how these synthesis techniques contribute to diverse fields, from cinematic soundtracks to social media engagement.

2,332 miles (3,753 km) to the west, Professor Garth Paine is offering a Kyma course at the ASU Herberger Institute for Design and the Arts in Tempe, Arizona.

From the course catalog: The Kyma System is an advanced real-time sound synthesis and electro-acoustic music composition and performance software/hardware instrument. It is widely used in major film sound design studios, by composers across the globe and in scientific sound sonification. The Kyma system is a patcher-like environment which can also be scripted and driven externally by OSC and MIDI. Algorithms can be placed in timelines for dynamic instantiation based on musical events or in grids and as fixed patches. The system has several very powerful FFT and spectral processing approaches which can also be used live. In this class, learn about the potential of the system and several of the ways in which it can be used in creating innovative sound design and live electronics with instruments. The class is focused on students who are interested in electroacoustic music composition and realtime performance and more broadly in sound design.
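For readers who have not met OSC before, the sketch below shows roughly what "driven externally by OSC" means at the byte level: a message is an address string, a type-tag string, and arguments, each padded to a four-byte boundary, typically sent over UDP. This follows the general OSC 1.0 encoding rules only. The address `/example/cutoff` and the helper names are hypothetical and are not taken from the Kyma documentation, which defines the addresses and port the system actually responds to.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII bytes, NUL-terminated, padded to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, value):
    """Build an OSC message carrying a single big-endian 32-bit float argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


def send_osc(host, port, address, value):
    """Send one OSC message over UDP; host, port, and address are up to the caller."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, value), (host, port))


# Hypothetical address, used only to show the packet layout.
packet = osc_message("/example/cutoff", 0.5)
```

In practice a library would usually handle this encoding; writing it out by hand just makes the structure of a message visible.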

These are not the only institutions of higher learning where Kyma knowledge is on offer this fall. Here’s a sampling of some other schools offering courses where you’ll learn to apply Kyma skills to sound design, composition, and data sonification:

If you are teaching a Kyma course this year, and don’t see yourself on the list, please let us know.

Franz Danksagmüller was in Waltershausen 25-28 August 2024 presenting workshops on how to integrate live electronics with the pipe organ. Here’s a photo of “the hand” controller designed by Franz, alongside an Emotiv EEG headband and Kyma Control.

Although from this vantage point, one might think that the organ loft is nearly paradisiacal…

…the organ builder took pains to remind the organist of the alternative (or at the very least, to have a laugh).