Kyma 7 now offers plug-and-play support for Roger Linn Design’s LinnStrument and other MPE-enabled MIDI instruments. Kyma automatically puts the LinnStrument into MPE mode when you connect it via USB-MIDI or 5-pin DIN MIDI (or via your computer, using Delora Software’s Kyma Connect). Once the LinnStrument is connected, any keyboard-controlled Sound in Kyma automatically sets its polyphony and responds to it. No extra controllers are needed, and you don’t have to select a special mode on the LinnStrument: just plug it in and play.
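For reference, the MPE specification defines a standard way for a host to configure an instrument: an MPE Configuration Message (Registered Parameter Number 6) sent on a zone’s master channel, whose data value is the number of member channels reserved for individual notes. The article does not say which message Kyma actually sends to the LinnStrument, so the Python sketch below only illustrates the standard message layout; the function names are hypothetical. Because MPE gives each sounding note its own member channel, the member-channel count is one natural choice for the polyphony a host sets up.

```python
def mpe_configuration_message(member_channels: int, upper_zone: bool = False) -> bytes:
    """Return the raw MIDI bytes of an MPE Configuration Message (RPN 6).

    Channels are zero-based here: the Lower Zone master is MIDI channel 1
    (index 0) and the Upper Zone master is MIDI channel 16 (index 15).
    """
    if not 0 <= member_channels <= 15:
        raise ValueError("an MPE zone has between 0 and 15 member channels")
    master = 15 if upper_zone else 0
    cc = 0xB0 | master  # Control Change status byte on the master channel
    return bytes([
        cc, 101, 0,               # RPN MSB = 0
        cc, 100, 6,               # RPN LSB = 6 (MPE Configuration Message)
        cc, 6, member_channels,   # Data Entry MSB = number of member channels
    ])


def member_channel_indices(count: int, upper_zone: bool = False) -> list[int]:
    """Zero-based member channels of a zone (one sounding note per channel)."""
    if upper_zone:
        return [14 - i for i in range(count)]  # MIDI channels 15, 14, ... counting down
    return [1 + i for i in range(count)]       # MIDI channels 2, 3, ... counting up


# Example: a full Lower Zone with 15 member channels (MIDI channels 2-16).
message = mpe_configuration_message(15)
polyphony = len(member_channel_indices(15))  # hypothetical: one voice per member channel
print(message.hex(" "), polyphony)  # b0 65 00 b0 64 06 b0 06 0f 15
```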

What is MPE?

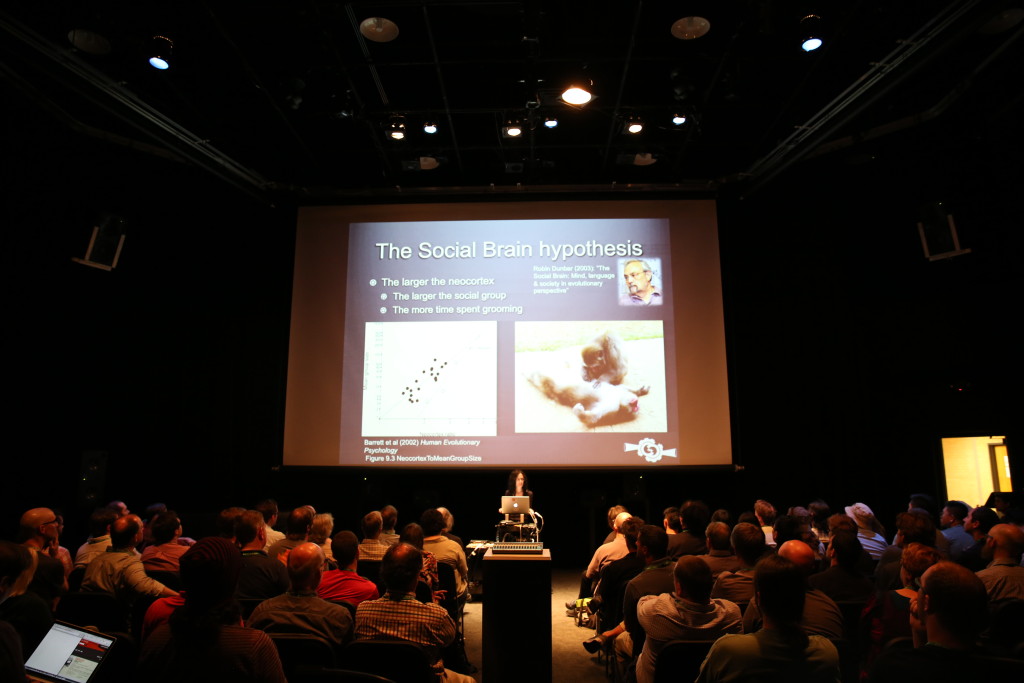

Traditional MIDI note events have two dimensions — pitch and velocity — neither of which can be altered directly with the fingers once the key has gone down. But musicians performing with live electronics are driving the demand for new electronic instruments — instruments whose touch, reliability, sensitivity, and responsiveness can begin to approach those of traditional acoustic instruments.

Over the last 10-15 years, more and more instrument makers have sought to incorporate continuous control over pitch and velocity and to add a third dimension of continuous control: timbre. One of the earliest entries in this new category was the Continuum fingerboard from Haken Audio (which has had plug-and-play support in Kyma since 2001). More recently, Madrona Labs (Soundplane), Eigenlabs (Eigenharp), ROLI (Seaboard), and Roger Linn Design (LinnStrument) have been offering “keyboard-like” instruments that provide three dimensions of expressive, continuous control per finger.

But how is it possible to send these three-dimensional, continuous, polyphonic MIDI notes to a sound engine? Haken Audio first used a FireWire protocol before switching over to a proprietary, optimized MIDI protocol. Symbolic Sound and Madrona Labs used Open Sound Control (OSC) for Kyma Control and Soundplane, respectively. But the growing proliferation of new instruments and proprietary protocols was threatening to become a nightmare for software and hardware synthesizer makers to support.

Enter software developer Geert Bevin, who in January of this year began working with key industry professionals on a new, more expressive MIDI specification called MPE: Multidimensional Polyphonic Expression. The new MPE standard has already been implemented on Roger Linn Design’s LinnStrument, the Madrona Labs Soundplane, and the ROLI Seaboard RISE, and several other instrument makers are currently adding an MPE mode to their instruments.
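The key idea in MPE is that each new note is assigned its own member channel, so ordinary channel-wide messages (pitch bend, channel pressure, and Control Change 74, which MPE uses for the third, “timbre” dimension) can shape that one note without affecting the others. The Python sketch below shows the sending side under stated assumptions: a Lower Zone using MIDI channels 2 to 16, the MPE default member-channel pitch bend range of ±48 semitones, and a simple rotate-through-free-channels policy. The class and method names are hypothetical, and a real controller would also need a channel-stealing strategy for when every channel is busy.

```python
PITCH_BEND_RANGE = 48.0  # semitones; assumed MPE default for member channels


def pitch_bend(channel: int, semitones: float) -> bytes:
    """14-bit pitch bend centered at 8192, scaled to +/-PITCH_BEND_RANGE."""
    value = round(8192 + semitones / PITCH_BEND_RANGE * 8192)
    value = max(0, min(16383, value))  # clamp to the 14-bit range
    return bytes([0xE0 | channel, value & 0x7F, value >> 7])  # LSB first, then MSB


class MPENoteSender:
    """Hypothetical sender: one member channel per sounding note."""

    def __init__(self, channels):
        self.free = list(channels)  # zero-based member channels, in rotation order
        self.active = {}            # note id -> member channel

    def note_on(self, note_id, note: int, velocity: int) -> bytes:
        if not self.free:
            raise RuntimeError("all member channels busy; a real sender would steal one")
        channel = self.free.pop(0)
        self.active[note_id] = channel
        # Reset this channel's pitch bend before the note starts, then send the note-on.
        return pitch_bend(channel, 0.0) + bytes([0x90 | channel, note, velocity])

    def expression(self, note_id, bend: float, pressure: int, timbre: int) -> bytes:
        """Per-note bend (semitones), pressure (0-127) and timbre (CC 74, 0-127)."""
        channel = self.active[note_id]
        return (pitch_bend(channel, bend)
                + bytes([0xD0 | channel, pressure])     # channel pressure
                + bytes([0xB0 | channel, 74, timbre]))  # CC 74 = timbre

    def note_off(self, note_id, note: int, release_velocity: int = 64) -> bytes:
        channel = self.active.pop(note_id)
        self.free.append(channel)  # released channel goes to the back of the rotation
        return bytes([0x80 | channel, note, release_velocity])


sender = MPENoteSender(range(1, 16))  # MIDI channels 2-16 (zero-based 1-15)
print(sender.note_on("finger1", 60, 100).hex(" "))
print(sender.expression("finger1", 2.0, 90, 30).hex(" "))
print(sender.note_off("finger1", 60).hex(" "))
```

Rotating released channels to the back of the queue gives each channel’s release tail the longest possible time to finish before that channel is reused for a new note.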

With MPE, the music industry now has a standard protocol for communicating between expressive controllers and the sound hardware and software capable of sonically expressing the subtlety, responsiveness, and live interaction offered by these controllers.
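On the receiving side, a sound engine can recover each finger’s three dimensions by keeping the latest pitch bend, pressure, and CC 74 value per channel and combining them with whichever note is sounding on that channel. The Python sketch below is a minimal illustration of that bookkeeping, not Kyma’s implementation; it assumes the ±48-semitone member-channel bend range, and for brevity it does not apply the master channel’s zone-wide messages to the other notes.

```python
from dataclasses import dataclass


@dataclass
class ChannelExpression:
    bend: float = 0.0      # semitones, from 14-bit pitch bend
    pressure: float = 0.0  # 0.0-1.0, from channel pressure
    timbre: float = 0.0    # 0.0-1.0, from CC 74 (0.0 until a CC 74 arrives)


class MPEReceiver:
    """Hypothetical receiver: per-channel expression plus one note per channel."""

    def __init__(self, bend_range: float = 48.0):
        self.bend_range = bend_range
        self.expr = {ch: ChannelExpression() for ch in range(16)}
        self.notes = {}  # channel -> (note number, velocity)

    def handle(self, msg: bytes) -> None:
        status, data = msg[0], msg[1:]
        kind, ch = status & 0xF0, status & 0x0F
        e = self.expr[ch]
        if kind == 0x90 and data[1] > 0:
            self.notes[ch] = (data[0], data[1])
        elif kind == 0x80 or kind == 0x90:  # note-off, or note-on with velocity 0
            self.notes.pop(ch, None)
        elif kind == 0xE0:
            value = data[0] | (data[1] << 7)  # LSB, MSB -> 0..16383
            e.bend = (value - 8192) / 8192 * self.bend_range
        elif kind == 0xD0:
            e.pressure = data[0] / 127
        elif kind == 0xB0 and data[0] == 74:
            e.timbre = data[1] / 127

    def voices(self):
        """Yield (channel, pitch in semitones, velocity, pressure, timbre)."""
        for ch, (note, velocity) in sorted(self.notes.items()):
            e = self.expr[ch]
            yield ch, note + e.bend, velocity, e.pressure, e.timbre


receiver = MPEReceiver()
# Expression sent before the note-on is kept, because state is stored per channel.
for msg in ([0xE1, 85, 66], [0x91, 60, 100], [0xD1, 127], [0xB1, 74, 0]):
    receiver.handle(bytes(msg))
print(list(receiver.voices()))
```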

Kyma — Interactive, responsive, and live

Kyma, with its legendary audio quality, vast synthesis codebase, and deep access to detailed parameter control, is the ideal sound engine to pair with these new, more responsive controllers for live, expressive performance. Symbolic Sound also has a long history of working with instrument makers to provide tight, seamless integration and bidirectional communication between their instruments and Kyma.

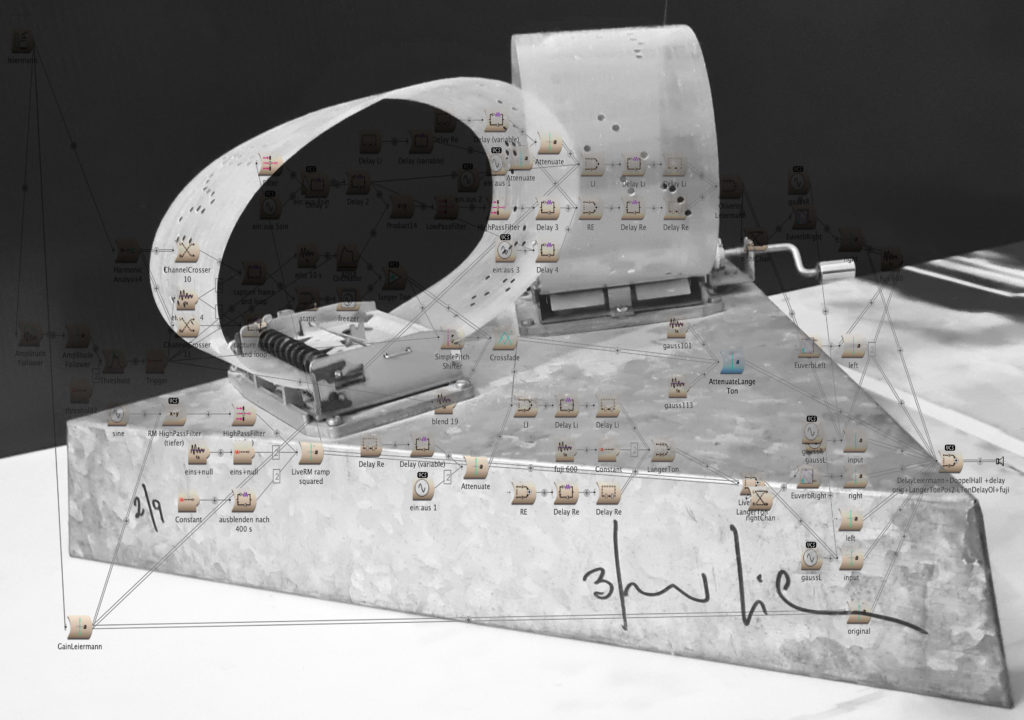

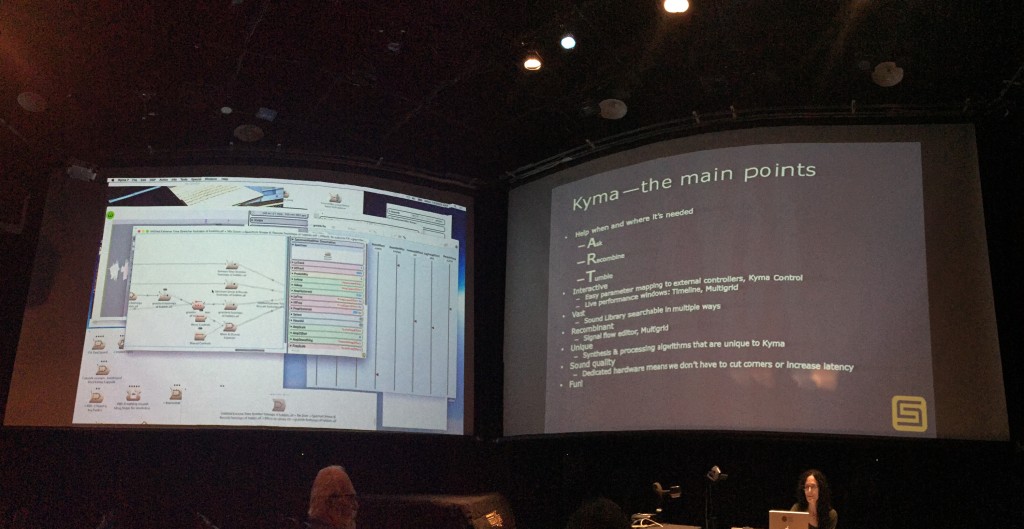

In addition to its graphical signal flow editor, file editors, and Sound Library, Kyma 7 also provides several environments in which you can create an instrument where the synthesis, processing, parameter-mapping, and even the mode of interaction can evolve over time during a performance:

- In the Multigrid (displayed on the iPad during the video), you can switch instantly between sources, effects, and combinations of the two with no interruption in the audio signal. Perform live, inspired in the moment, with infinite combinatorial possibilities.

- In the Kyma 7 Timeline you can slow down or stop the progression of time to synchronize your performance with other performers, with key events, or with features extracted from an audio signal during your performance.

- Using the Tool, you can create a state machine in which input conditions trigger the evaluation of blocks of code (for example, the Game of Life displayed on the LinnStrument during the closing credits of the video is controlled by a Tool).

- Kyma also provides a realtime parameter language called Capytalk where you can make parameters depend on one another or control subsets of parameters algorithmically.

- It’s easy to add a new parameter control: simply type the desired controller name preceded by an exclamation point. A control is automatically created for you, and it even generates its own widget in a Virtual Control Surface, which can be remapped to external controllers (through MIDI, 14-bit MIDI, or OSC). This makes it easy to augment your live MPE controllers with other MIDI and OSC controllers or with tablet controller apps.
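The hot-parameter idea and the 14-bit MIDI remapping mentioned above can be sketched in a few lines of Python. This is a hypothetical model, not Kyma’s code: it assumes a control name is a letter followed by letters or digits, and it follows the MIDI 1.0 convention that a 14-bit controller pairs an MSB on controller 0-31 with an LSB on controller 32-63, giving 16384 steps instead of 128.

```python
import re

# Assumed syntax for illustration: "!" followed by a letter, then letters/digits.
HOT_PARAMETER = re.compile(r"!([A-Za-z][A-Za-z0-9]*)")


class VirtualControlSurface:
    """Hypothetical model: one widget value (0.0-1.0) per hot parameter."""

    def __init__(self):
        self.values = {}   # control name -> normalized value
        self.cc_map = {}   # MSB controller number (0-31) -> control name
        self._msb = {}     # last MSB data byte received per mapped controller

    def add_from_parameter(self, text: str) -> list[str]:
        """Create a control for every !Name in a parameter field; return the names."""
        names = HOT_PARAMETER.findall(text)
        for name in names:
            self.values.setdefault(name, 0.0)
        return names

    def map_14bit(self, name: str, msb_cc: int) -> None:
        if not 0 <= msb_cc <= 31:
            raise ValueError("14-bit MSB controllers are 0-31 (the LSB is controller + 32)")
        self.values.setdefault(name, 0.0)
        self.cc_map[msb_cc] = name

    def control_change(self, cc: int, value: int) -> None:
        if cc in self.cc_map:
            # A new MSB on its own: treat the LSB as 0 until an LSB update arrives.
            self._msb[cc] = value
            self.values[self.cc_map[cc]] = (value << 7) / 16383
        elif 32 <= cc <= 63 and (cc - 32) in self.cc_map:
            msb_cc = cc - 32
            combined = (self._msb.get(msb_cc, 0) << 7) | value
            self.values[self.cc_map[msb_cc]] = combined / 16383
        # Controllers that are not mapped are ignored.


vcs = VirtualControlSurface()
vcs.add_from_parameter("!Frequency * !Vibrato")  # hypothetical parameter text
vcs.map_14bit("Vibrato", 1)  # e.g. a controller sending CC 1 (MSB) and CC 33 (LSB)
vcs.control_change(1, 127)
vcs.control_change(33, 127)
print(vcs.values)
```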

More information

Multidimensional Polyphonic Expression (MPE)

expressiveness.org

LinnStrument

rogerlinndesign.com

Kyma 7

symbolicsound.com

When Smith came across the Minirig loudspeaker — a small Bluetooth speaker typically used for small parties and “annoying people on the beach” — he bought 4 of them and, using standard microphone mounts and gooseneck microphone stands, he started experimenting with various configurations. Initially using tea coasters and cable ties, he eventually found drainpipe mounts that fit the Minirigs perfectly. Now he’s able to flexibly angle the loudspeakers toward nearby reflective surfaces (walls, windows, ceilings, panels), creating an impression of the sound coming from the room and not just the loudspeaker.