Generate sequences based on the patterns discovered in your MIDI files

Symbolic Sound today released a new module that generates endlessly evolving sequences based on the patterns it discovers in a MIDI file. HMMPatternGenerator, the latest addition to the library of the world’s most advanced sound design environment, is now available to sound designers, musicians, and researchers as part of a free software update: Kyma 7.25.

Composers and sound designers are masters of pattern generation — skilled at inventing, discovering, modifying, and combining patterns with just the right mix of repetition and variation to excite and engage the attention of a listener. HMMPatternGenerator is a tool to help you discover the previously unexplored patterns hidden within your own library of MIDI files and to generate endlessly varying event sequences, continuous controllers, and new combinations based on those patterns.

Here’s a video glimpse at some of the potential applications for the HMMPatternGenerator:

What can HMMPatternGenerator do for you?

Games, VR, AR — In an interactive game or virtual environment, there’s no such thing as a fixed-duration scene. HMMPatternGenerator can take a short segment of music and extend it for an indeterminate length of time without looping.

Live improvisation backgrounds — Improvise live over an endlessly evolving HMMPatternGenerator sequence based on the patterns found in your favorite MIDI files.

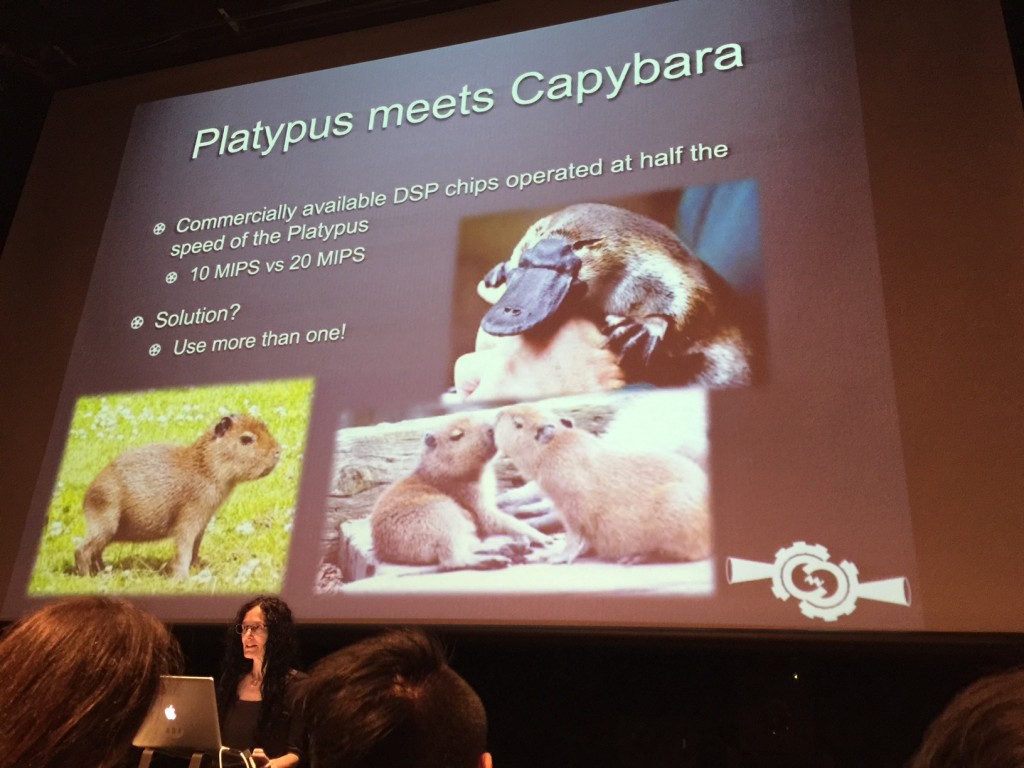

Keep your backgrounds interesting — Have you been asked to provide the music for a gallery opening, a dance class, a party, an exercise class or some other event where music is not the main focus? The next time you’re asked to provide “background” music, you won’t have to resort to loops or sample clouds; just create a short segment in the appropriate style, save it as a MIDI file, and HMMPatternGenerator will generate sequences in that style for as long as the party lasts — even after you shut down your laptop (because it’s all generated on the Paca(rana) sound engine, not on your host computer).

Inspiration under a deadline — Need to get started quickly? Provide HMMPatternGenerator with your favorite MIDI files, route its MIDI output stream to your sequencer or notation software, and listen while it generates endless recombinations and variations on the latent patterns lurking within those files. Save the best parts to use as a starting point for your new composition.

Sound for picture — When the director doubles the duration of a scene a few hours before the deadline, HMMPatternGenerator can come to the rescue by taking your existing cue and extending it for an arbitrary length of time, maintaining the original meter and the style but with continuous variations (no looping).

Structured textures — HMMPatternGenerator isn’t limited to generating discrete note events; it can also generate timeIndex functions to control other synthesis algorithms (like SumOfSine resynthesis, SampleClouds and more) or as a time-dependent control function for any other synthesis or processing parameter. That means you can use a MIDI file to control abstract sounds in a new, highly-structured way.

MIDI as code — If you encode the part-of-speech (like verb, adjective, noun, etc) as a MIDI pitch, you can compose a MIDI sequence that specifies a grammar for English sentences and then use HMMPatternGenerator to trigger samples of yourself speaking those words — generating an endless variety of grammatically correct sentences (or even artificial poetry). Imagine what other secret meanings you could encode as MIDI sequences — musical sequences that can be decrypted only when decoded by the Kyma Sound generator you’ve designed for that purpose.
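As a rough illustration of the encoding idea, here is a minimal Python sketch. The pitch-to-part-of-speech table, the vocabulary, and the `realize` function are all hypothetical names invented for this example; in Kyma the pitches would trigger recorded samples rather than print text.

```python
import random

# Hypothetical encoding: one MIDI pitch per part of speech (an assumption
# made for this sketch, not a convention defined by Kyma).
PART_OF_SPEECH = {60: "article", 62: "adjective", 64: "noun", 65: "verb"}

# Hypothetical vocabulary; each word stands in for a recorded sample.
VOCABULARY = {
    "article": ["the", "a"],
    "adjective": ["quiet", "bright"],
    "noun": ["signal", "listener"],
    "verb": ["follows", "hears"],
}

def realize(pitches, seed=None):
    """Turn a sequence of MIDI pitches into a sentence, one word per pitch."""
    rng = random.Random(seed)
    return " ".join(rng.choice(VOCABULARY[PART_OF_SPEECH[p]]) for p in pitches)

# A pitch sequence acting as a grammar template:
# article, adjective, noun, verb, article, noun.
sentence_template = [60, 62, 64, 65, 60, 64]
print(realize(sentence_template))
```

Each run picks different words for the same grammatical slots, so one short pitch sequence can yield many well-formed sentences.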

Self-discovery — HMMPatternGenerator can help you tease apart what it is about your favorite music that makes it sound the way it does. By adjusting the parameters of the HMMPatternGenerator and listening to the results, you can uncover latent structures and hypermeters buried deep within the music of your favorite composers — including some patterns you hadn’t even realized were hidden within your own music.

Remixes and mashups — Use HMMPatternGenerator to generate a never-ending stream of ideas for remixes (of one MIDI file) and amusing mashups (when you provide two or more MIDI files in different styles).

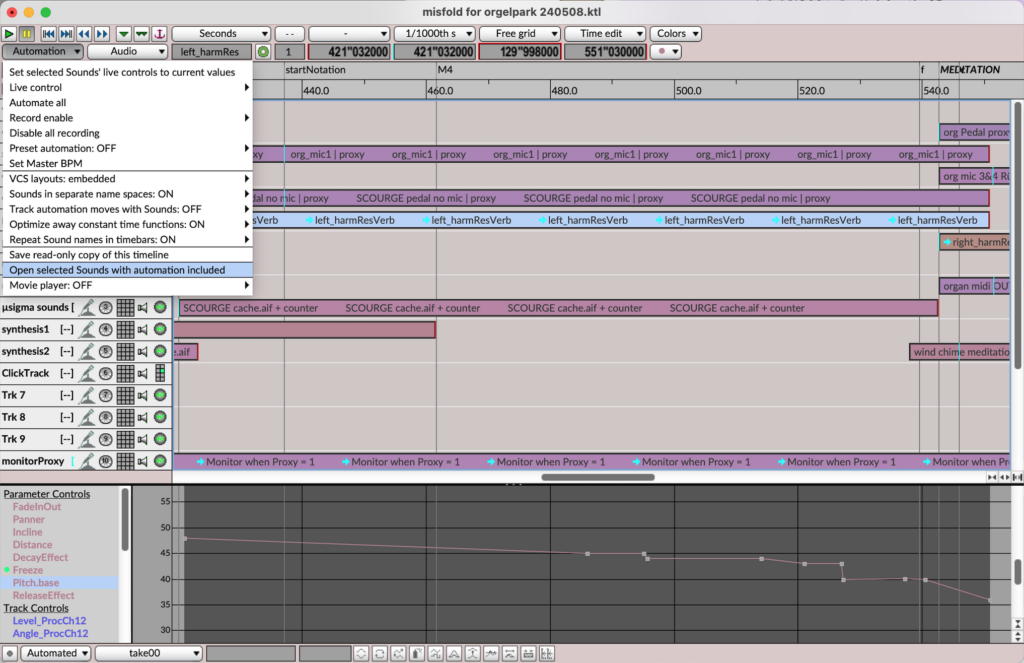

Galleries of possibilities — Select a MIDI file in the Kyma 7.25 Sound Browser and, at the click of a button, generate a gallery of hundreds of pattern generators, all based on that MIDI file. At that point, it’s easy to substitute your own synthesis algorithms and design new musical instruments to be controlled by the pattern-generator. Quickly create unique, high-quality textures and sequences by combining some of the newly-developed MIDI-mining pattern generators with the massive library of unique synthesis and processing modules already included with Kyma.

How does it work?

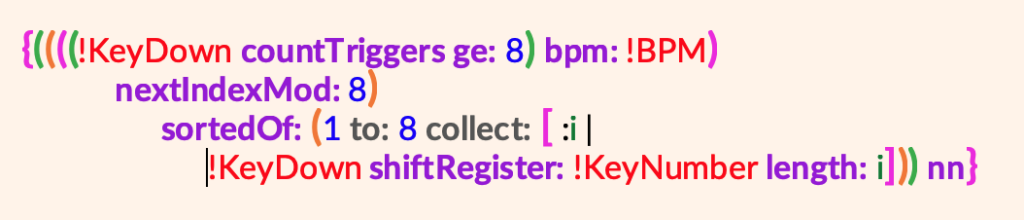

If each event in the original MIDI file is completely unique, then there is only one path through the sequence — the generated sequence is the same as the original MIDI sequence. Things start to get interesting when some of the events are, in some way, equivalent to others (for example, when events of the same pitch and duration appear more than once in the file).

HMMPatternGenerator uses equivalent events as pivot points — decision points at which it can choose to take an alternate path through the original sequence (the proverbial “fork in the road”). No doubt you’re familiar with using a common chord to pivot to another key; now imagine using a common state to pivot to a whole new section of a MIDI file or, if you give HMMPatternGenerator several MIDI files, from one genre to another.
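The pivot idea can be sketched in a few lines of Python. This is a simplified first-order Markov walk, offered as an illustration only and not as Symbolic Sound's actual algorithm. It assumes events are `(pitch, duration)` tuples and treats two events as equivalent only when both values match exactly; `build_successors` and `generate` are hypothetical names.

```python
import random
from collections import defaultdict

def build_successors(events):
    """Map each event to every event that follows an equivalent event."""
    successors = defaultdict(list)
    for current, following in zip(events, events[1:]):
        successors[current].append(following)
    return successors

def generate(events, length, seed=None):
    """Walk the sequence, pivoting wherever an event occurs more than once."""
    rng = random.Random(seed)
    successors = build_successors(events)
    current = events[0]
    output = [current]
    while len(output) < length:
        # If the current event never had a successor (it only ends the
        # file), start again from the first event.
        choices = successors.get(current) or [events[0]]
        current = rng.choice(choices)
        output.append(current)
    return output

# (60, 1.0) appears three times, so it offers three possible continuations.
melody = [(60, 1.0), (62, 0.5), (60, 1.0), (64, 0.5), (60, 1.0), (65, 1.0)]
print(generate(melody, 12))
```

If every event in `melody` were unique, each event would have exactly one successor and the walk would simply reproduce the original sequence.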


By live-tweaking the strengths of three equivalence tests — pitch, time-to-next, and position within a hyper-bar — you can continuously shape how closely the generated sequence follows the original sequence of events, ranging from a note-for-note reproduction to a completely random sequence based only on the frequency with which that event occurs in the original file.
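One way to picture adjustable equivalence strengths is the Python sketch below. It is an assumption-laden model, not the HMMPatternGenerator implementation: the `Event` fields, the `pivot_weight` rule (each mismatched attribute scales the chance of a pivot by `1 - strength`), and the function names are all invented for illustration.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    pitch: int
    time_to_next: float   # beats until the next event
    bar_position: float   # position within a (hypothetical) hyper-bar

def pivot_weight(a, b, strengths):
    """Relative likelihood of treating event b as equivalent to event a."""
    weight = 1.0
    for attribute, strength in strengths.items():
        if getattr(a, attribute) != getattr(b, attribute):
            weight *= 1.0 - strength   # strength 1.0 forbids a mismatch
    return weight

def generate(events, length, strengths, seed=None):
    rng = random.Random(seed)
    i, output = 0, []
    for _ in range(length):
        output.append(events[i])
        # Pick a position j whose event resembles events[i], then continue
        # from the event that followed it in the original (wrapping at the end).
        weights = [pivot_weight(events[i], e, strengths) for e in events]
        j = rng.choices(range(len(events)), weights=weights)[0]
        i = (j + 1) % len(events)
    return output

strict = {"pitch": 1.0, "time_to_next": 1.0, "bar_position": 1.0}
loose = {"pitch": 0.0, "time_to_next": 0.0, "bar_position": 0.0}
```

In this model, `strict` allows a pivot only between events that match on all three attributes, so a file of unique events is reproduced note for note. With `loose`, every position is equally likely, so each event is drawn in proportion to how often it occurs in the original file. Intermediate strengths fall between those extremes.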

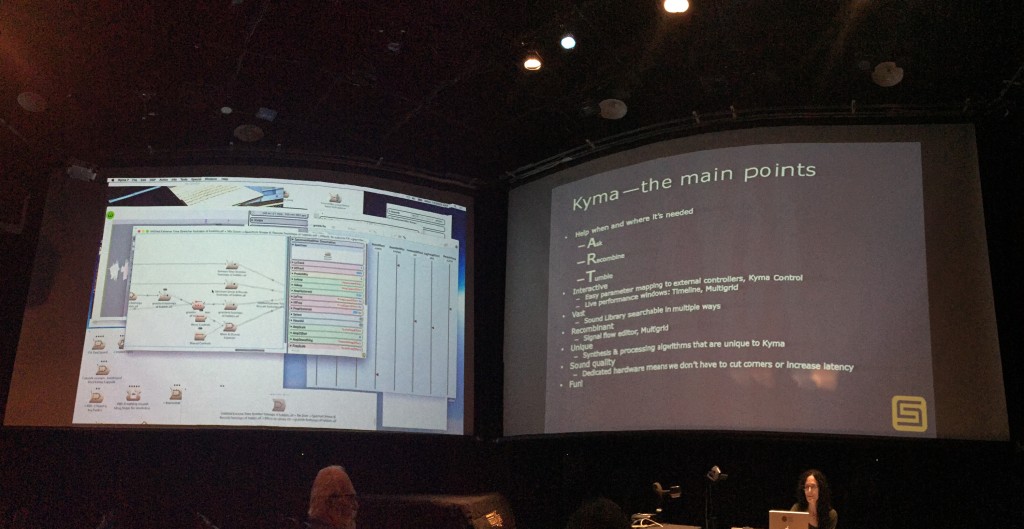

Other new features in Kyma 7.25 include:

▪ Optimizations to the Spherical Panner for 3D positioning and panning (elevation and azimuth) in motion-tracking VR or mixed-reality applications and enhanced binaural mixes, providing up to a fourfold speed increase (meaning you can track up to 4 times as many 3D sound sources in real time).

▪ Multichannel interleaved file support in the Wave editor

▪ New granular reverberation and 3D spatializing examples in the Kyma Sound Library

and more…

Availability

Kyma 7.25 is available as a free update starting today and can be downloaded from the Help menu in Kyma 7. For more information, please visit: symbolicsound.com.

Summary

The new features in the Kyma 7.25 sound design environment are designed to help you stay in the creative flow: automatic Gallery generation from MIDI files, and the HMMPatternGenerator module, which can be combined with the extensive library of sound synthesis, pattern-generation, and processing algorithms already available in Kyma.

Background

Symbolic Sound revolutionized the live sound synthesis and processing industry with the introduction of Kyma in 1990. Today, Kyma continues to set new standards for sound quality, innovative synthesis and processing algorithms, rock-solid live performance hardware, and a supportive, professional Kyma community both online and through the annual Kyma International Sound Symposium (KISS).

For more information:

Website

Email

@SymbolicSound

Facebook

Kyma, Pacarana, Paca, and their respective logos are trademarks of Symbolic Sound Corporation. Other company and product names may be trademarks of their respective owners.

In 1999, astrophysicist/musician David McClain spent an intense three-month period working on The Northern Sky Survey, mapping the sky in the near infrared while getting by on an hour of sleep per night. When he finished the survey, he was suddenly struck by a viral infection that nearly killed him; his doctors were never able to determine the cause and, after three months, the infection dissipated almost as quickly as it had appeared. But afterward David noticed that he could no longer understand his wife when she was speaking to him. He went to an audiologist and discovered he had a sensorineural hearing loss of 60-70 dB in the high frequency range. Hearing aids helped him understand speech, but he was devastated to discover that music never sounded right through the hearing aids. But as a physicist, he was determined to solve the problem.
